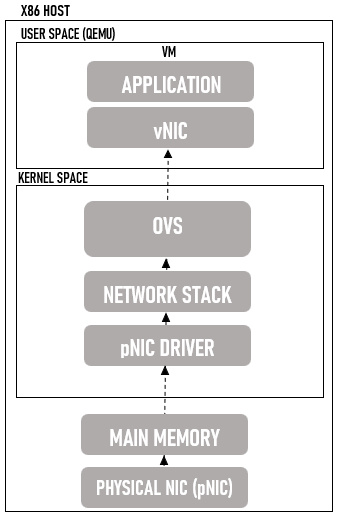

In non-virtualized environments, data traffic is received by the physical NIC (pNIC) and is sent to an application in the user space via the kernel space. In a virtual environment or VNF network, however, a hypervisor, a virtual switch, and virtual NICs (vNICs) sit between the pNIC and the application.

The hypervisor and the virtual switch take the data from the pNIC and send it to the vNIC of the Virtual Machine or the Virtual Network Function (VNF), and then to the application. This virtual layer adds virtualization overhead and extra packet processing, which reduces I/O packet throughput and creates bottlenecks.

VNF Network Packet Flow

The image below shows the packet flow for a virtualized system using an Open vSwitch (OVS) architecture:

Packets received by the pNIC go through several steps before they reach the Virtual Machines' applications. These steps are as follows:

- The pNIC receives the data traffic and places it in an Rx queue (ring buffers).

- The pNIC forwards the packet to the main memory buffer via Direct Memory Access (DMA). The packet comes with a packet descriptor which includes the memory location and packet size.

- The pNIC sends an Interrupt Request (IRQ) to the CPU.

- The CPU passes the control to the pNIC driver that services the IRQ. The pNIC driver receives the packet and moves it into the network stack. Then, the packet gets into a socket and is placed into a socket receive buffer.

- The packet is copied to the Open vSwitch (OVS) virtual switch.

- The packet is processed by the OVS and is then sent to the VM. The packet switches between the kernel and user space, which consumes extensive CPU cycles.

- The packet is received by the vNIC and is placed in an Rx queue.

- The vNIC forwards the packet and its packet descriptor to the virtual memory buffer via DMA.

- The vNIC sends an IRQ to the virtual CPU (vCPU).

- The vCPU passes the control to the vNIC driver that services the IRQ. It then receives the packet and moves it into the network stack. Finally, the packet arrives in a socket and is placed into a socket receive buffer.

- The packet data is then copied and sent to the VM application.

Every received packet must go through the same procedure, so the CPU is interrupted frequently. These interrupts add a significant amount of overhead and reduce the overall performance of the Virtualized Network Function.

Several I/O technologies have been developed to optimize the VNF network by avoiding the virtualization overhead and increasing the packet throughput. However, implementing these I/O technologies requires physical Network Interface Cards (NICs).
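The steps above can be sketched as a small model that tallies how many interrupts and data moves (DMA transfers or copies) a single packet incurs on the standard OVS path. This is only an illustration: the `Step` class, `RX_PATH`, and both helper functions are hypothetical names, and the flags on each step are read off the list above rather than measured. Real drivers often batch interrupts, so treat the result as a simple worst-case estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One stage of the receive path (hypothetical model, not an OVS API)."""
    description: str
    raises_irq: bool = False  # the step interrupts a CPU or vCPU
    moves_data: bool = False  # the step transfers the packet (DMA or copy)


# Stages paraphrased from the packet flow list above.
RX_PATH = [
    Step("pNIC places packet in Rx queue"),
    Step("pNIC DMAs packet to main memory buffer", moves_data=True),
    Step("pNIC sends IRQ to CPU", raises_irq=True),
    Step("pNIC driver moves packet into network stack and socket buffer"),
    Step("packet is copied to OVS", moves_data=True),
    Step("OVS processes packet and sends it to the VM"),
    Step("vNIC places packet in Rx queue"),
    Step("vNIC DMAs packet to virtual memory buffer", moves_data=True),
    Step("vNIC sends IRQ to vCPU", raises_irq=True),
    Step("vNIC driver moves packet into network stack and socket buffer"),
    Step("packet data is copied to the VM application", moves_data=True),
]


def per_packet_cost(path):
    """Return (interrupts, data moves) that one packet incurs on the path."""
    irqs = sum(step.raises_irq for step in path)
    moves = sum(step.moves_data for step in path)
    return irqs, moves


def interrupts_per_second(path, packets_per_second):
    """Estimate interrupts per second, assuming one IRQ per flagged step."""
    irqs, _ = per_packet_cost(path)
    return irqs * packets_per_second


if __name__ == "__main__":
    print(per_packet_cost(RX_PATH))
    print(interrupts_per_second(RX_PATH, 1_000_000))
```

In this model each packet triggers two interrupts, one on the host and one in the guest, which is the overhead the I/O technologies in the following sections try to remove.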

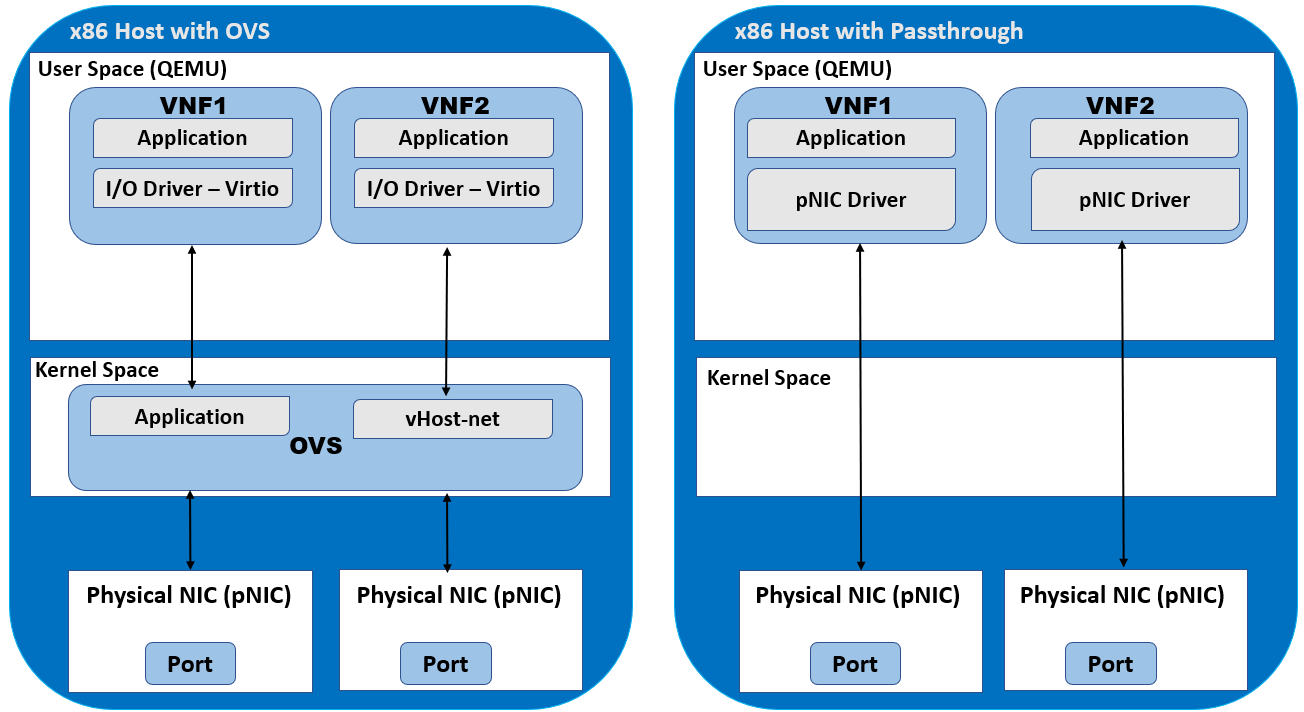

PCI Passthrough

Peripheral Component Interconnect (PCI) passthrough gives VNFs direct access to physical PCI devices, which appear and behave as if they were physically connected to the VNF. PCI passthrough can be used to map a single pNIC to a single VNF, making the VNF appear to be directly connected to the pNIC.

Using PCI passthrough provides performance advantages, such as:

- One-to-One Mapping

- Bypassed Hypervisor

- Direct Access to I/O Resources

- Increased I/O Throughput

- Reduced CPU Utilization

- Reduced System Latency

However, PCI passthrough dedicates an entire pNIC to a single VNF. The pNIC cannot be shared with other VNFs, so the number of VNFs is limited to the number of pNICs in the system.
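The one-to-one constraint can be illustrated with a short sketch. The function `assign_passthrough` and the device and VNF names are hypothetical; the code only models the mapping rule described above and does not configure any real hypervisor.

```python
def assign_passthrough(pnics, vnfs):
    """Map each VNF to its own dedicated pNIC (hypothetical helper).

    Raises ValueError when there are more VNFs than pNICs, because a pNIC
    in passthrough mode cannot be shared between VNFs.
    """
    if len(vnfs) > len(pnics):
        raise ValueError(
            f"{len(vnfs)} VNFs need {len(vnfs)} pNICs, "
            f"but only {len(pnics)} are available"
        )
    # zip pairs the first VNF with the first pNIC, and so on.
    return dict(zip(vnfs, pnics))


if __name__ == "__main__":
    print(assign_passthrough(["pnic0", "pnic1"], ["vnf-a", "vnf-b"]))
```

With two pNICs, a third VNF cannot be placed, and the function raises an error instead of sharing a device.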

The image below shows the difference between a standard OVS and PCI passthrough.

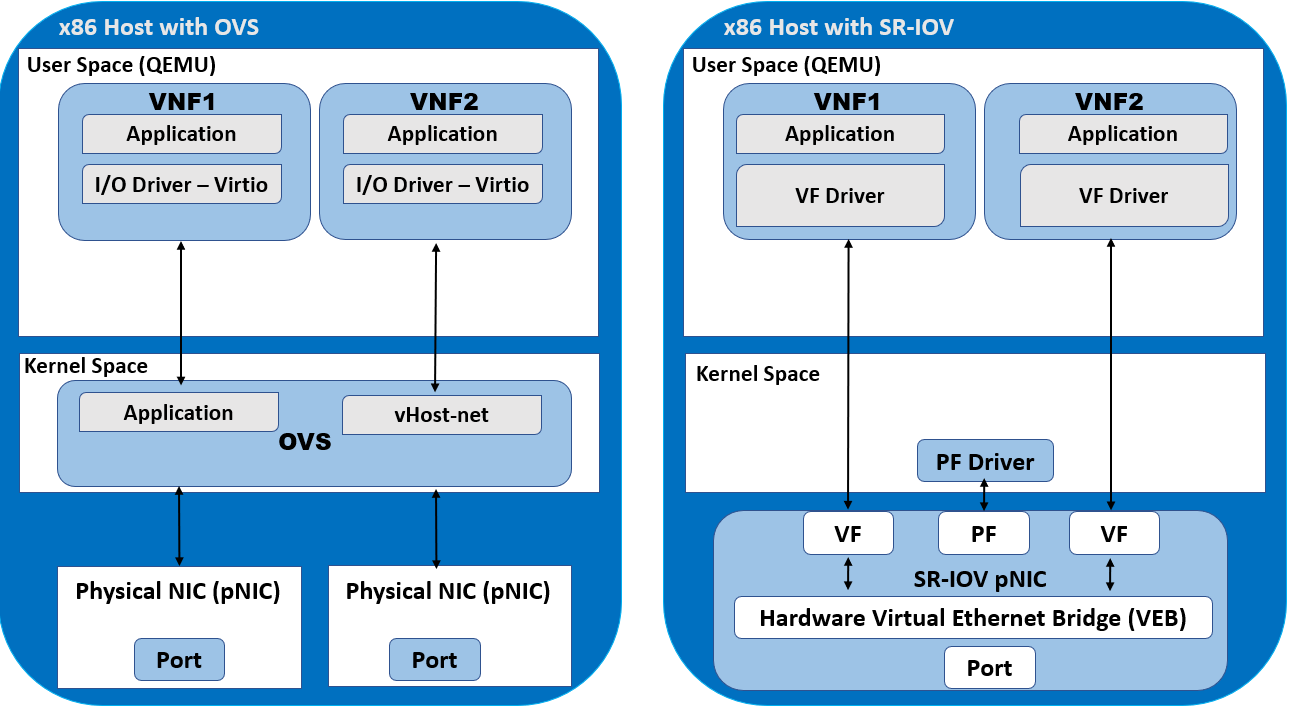

Single-Root I/O Virtualization (SR-IOV)

SR-IOV is a PCI passthrough feature that enables multiple VNFs to share the same pNIC. SR-IOV emulates multiple Peripheral Component Interconnect Express (PCIe) devices, such as pNICs, on a single PCIe device.

The emulated PCIe device is referred to as a Virtual Function (VF), and the physical PCIe device is referred to as a Physical Function (PF). Physical Functions have full PCIe features, while Virtual Functions are lightweight PCIe functions that only process I/O. Using PCI passthrough technology, the VNFs have direct access to the VFs.
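On a Linux host, the kernel exposes the VF limits of an SR-IOV-capable PF through the sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The sketch below reads them; it assumes a Linux host, and the function name `sriov_vf_counts` and the `sysfs_root` parameter are hypothetical additions that make the example easy to try against a test directory.

```python
from pathlib import Path


def sriov_vf_counts(iface, sysfs_root="/sys/class/net"):
    """Return the VF counts for a network interface, or None.

    Hypothetical helper: reads the Linux sysfs attributes
    <sysfs_root>/<iface>/device/sriov_totalvfs (maximum VFs the PF supports)
    and sriov_numvfs (VFs currently enabled).
    """
    device_dir = Path(sysfs_root) / iface / "device"
    total_path = device_dir / "sriov_totalvfs"
    if not total_path.exists():
        # Interface is missing or its device does not expose SR-IOV.
        return None
    return {
        "total": int(total_path.read_text().strip()),
        "enabled": int((device_dir / "sriov_numvfs").read_text().strip()),
    }


if __name__ == "__main__":
    # "eth0" is a placeholder interface name.
    print(sriov_vf_counts("eth0"))
```

The `total` value bounds how many VNFs can share that pNIC through VFs, in contrast to the single VNF per pNIC allowed by plain PCI passthrough.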

There are two SR-IOV-enabled pNIC modes for VNF traffic switching:

- Virtual Ethernet Bridge (VEB) – the pNIC directly switches the traffic between VNFs.

- Virtual Ethernet Port Aggregator (VEPA) – an external switch switches the traffic between VNFs.
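The difference between the two modes can be sketched as the path a frame takes between two VNFs on the same host. The `SwitchMode` enum, the `vnf_to_vnf_path` function, and the hop labels are hypothetical and only model the descriptions above.

```python
from enum import Enum


class SwitchMode(Enum):
    """SR-IOV traffic switching modes named in the list above."""
    VEB = "Virtual Ethernet Bridge"
    VEPA = "Virtual Ethernet Port Aggregator"


def vnf_to_vnf_path(mode):
    """Return the hops for traffic between two VNFs sharing one pNIC.

    Hypothetical model: in VEB mode the pNIC switches the frame itself,
    while in VEPA mode the frame leaves the host and an external switch
    sends it back.
    """
    if mode is SwitchMode.VEB:
        return ["VNF A (VF)", "pNIC switch", "VNF B (VF)"]
    if mode is SwitchMode.VEPA:
        return ["VNF A (VF)", "pNIC", "external switch", "pNIC", "VNF B (VF)"]
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    for mode in SwitchMode:
        print(mode.name, " -> ".join(vnf_to_vnf_path(mode)))
```

The VEPA path is longer, but it places the switching decision on the external switch rather than on the pNIC.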

The image below shows the difference between a standard OVS and SR-IOV.

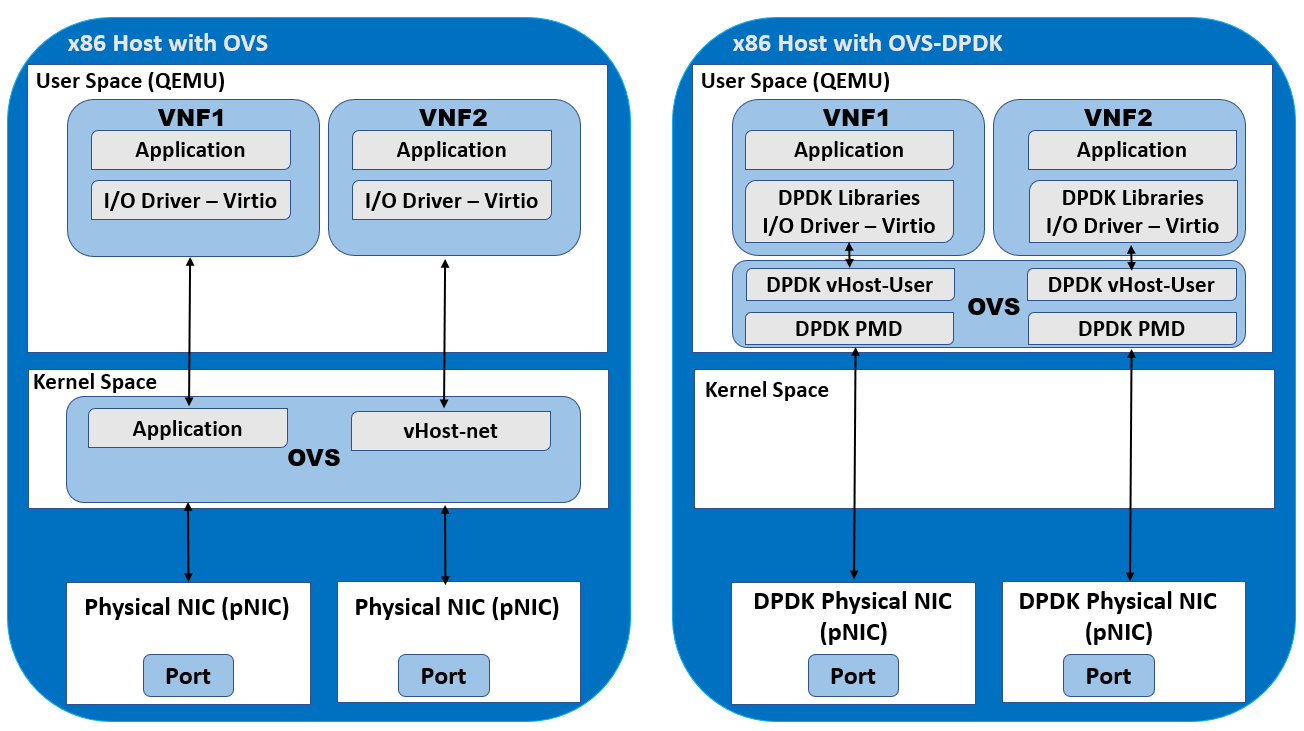

OVS Data Plane Development Kit (OVS-DPDK)

Open vSwitch (OVS) was extended with the Data Plane Development Kit (DPDK) libraries to address the impact of interrupts on packet throughput. OVS-DPDK operates in the user space. The OVS-DPDK Poll Mode Driver (PMD) polls and processes the data coming into the pNIC. Because the pNIC no longer needs to send an IRQ to the CPU when a packet is received, the packet bypasses the kernel space entirely.

To accomplish this, the DPDK PMD needs one or more CPU cores dedicated to polling and processing incoming data. When the packet enters the OVS, it is already in the user space, so it can be switched directly to the VNF, which significantly improves performance.
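Dedicating cores to the PMD is typically done in OVS-DPDK through the `other_config:pmd-cpu-mask` setting, which takes a hexadecimal bitmask of CPU core IDs. The sketch below builds that mask and the matching `ovs-vsctl` command string. The helper names `pmd_cpu_mask` and `ovs_pmd_command` are hypothetical, the core IDs are example values, and the code only prints the command rather than running it.

```python
def pmd_cpu_mask(cores):
    """Return a hex bitmask with one bit set per CPU core ID.

    Hypothetical helper: bit N of the mask corresponds to core N.
    """
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError(f"invalid core ID: {core}")
        mask |= 1 << core
    return hex(mask)


def ovs_pmd_command(cores):
    """Build (but do not run) the ovs-vsctl command that pins PMD threads."""
    return (
        "ovs-vsctl set Open_vSwitch . "
        f"other_config:pmd-cpu-mask={pmd_cpu_mask(cores)}"
    )


if __name__ == "__main__":
    # Example: dedicate cores 1 and 2 to polling (mask 0x6).
    print(ovs_pmd_command([1, 2]))
```

Cores selected this way spend their time polling, so they are effectively unavailable to other workloads, which is the trade-off for removing interrupts from the receive path.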

The image below shows the difference between a standard OVS and OVS-DPDK.
